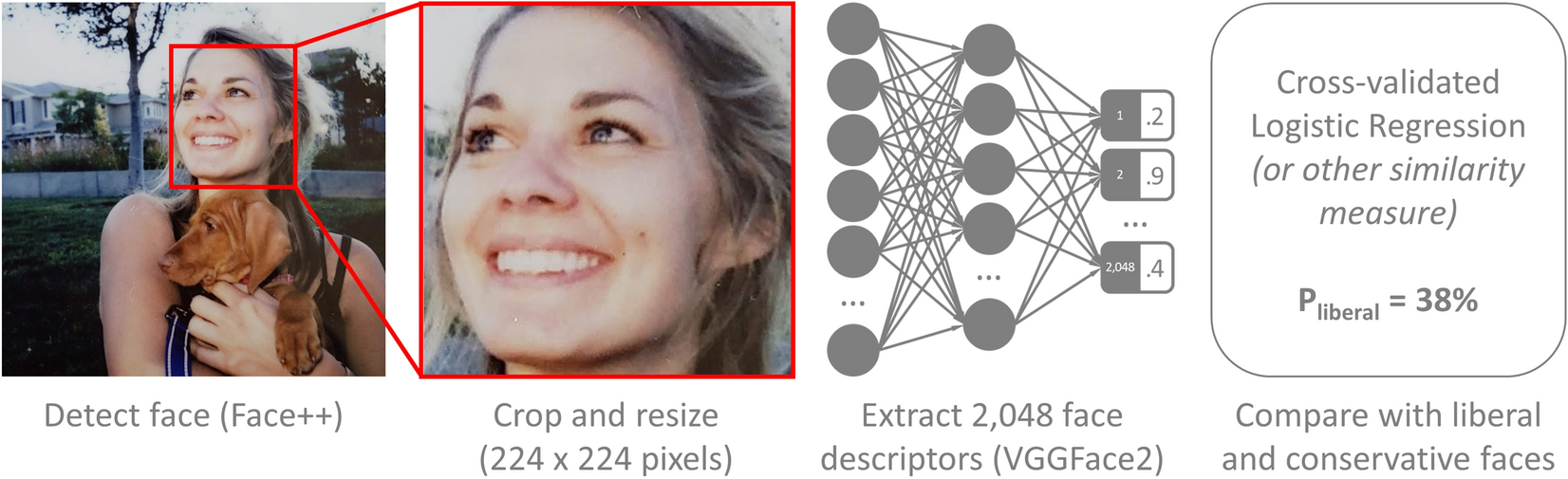

In a new paper titled "Facial recognition technology can expose political orientation from naturalistic facial images", researchers took a data set of roughly 1 million pictures and were able to predict political orientation with astonishing accuracy from a single picture. The pictures were taken from Facebook and a dating site (I assume OkCupid?). Using these images, the authors were able to predict self-reported political orientation (conservative vs. liberal) with around 70% accuracy. This worked similarly for pictures from the UK, Canada and the US, and it also held across samples (so you could train on pictures of British people on a dating site and get comparable accuracy for US people on Facebook). On the same data, humans had an accuracy of around 55%.

To keep the background from driving the classification, they cropped each image tightly around the face. The researchers also controlled for ethnicity, age and gender (as these correlate with political orientation) by only showing the algorithm people with matching characteristics. On these samples, accuracy dropped by only around 3.5%.
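
One way to picture this kind of control is to score the classifier only on pairs of people who share age, gender and ethnicity but differ in political orientation. The sketch below is my own illustration, not the authors' procedure: the dictionary keys, the `score` field (higher meaning "more likely liberal") and the toy data are all hypothetical.

```python
from collections import defaultdict
from itertools import product


def matched_pair_accuracy(people):
    """Fraction of matched liberal/conservative pairs ranked correctly.

    Each person is a dict with hypothetical keys 'age_group', 'gender',
    'ethnicity', 'liberal' (bool) and 'score' (classifier output).
    Only pairs from the same demographic stratum are compared.
    """
    strata = defaultdict(lambda: {True: [], False: []})
    for p in people:
        key = (p["age_group"], p["gender"], p["ethnicity"])
        strata[key][p["liberal"]].append(p["score"])

    correct, total = 0.0, 0
    for group in strata.values():
        for lib_score, con_score in product(group[True], group[False]):
            total += 1
            if lib_score > con_score:
                correct += 1.0
            elif lib_score == con_score:
                correct += 0.5  # a tie counts as a coin flip
    return correct / total if total else float("nan")


# Made-up example data, purely for illustration.
people = [
    {"age_group": "20s", "gender": "f", "ethnicity": "white", "liberal": True, "score": 0.8},
    {"age_group": "20s", "gender": "f", "ethnicity": "white", "liberal": False, "score": 0.3},
    {"age_group": "20s", "gender": "f", "ethnicity": "white", "liberal": False, "score": 0.9},
    {"age_group": "40s", "gender": "m", "ethnicity": "white", "liberal": True, "score": 0.6},
    {"age_group": "40s", "gender": "m", "ethnicity": "white", "liberal": False, "score": 0.4},
    # No matching conservative in this stratum, so this person forms no pair.
    {"age_group": "40s", "gender": "f", "ethnicity": "asian", "liberal": True, "score": 0.7},
]
accuracy = matched_pair_accuracy(people)
```

Because every comparison happens inside one demographic stratum, the classifier cannot score points simply by guessing someone's age, gender or ethnicity.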

They also compared their algorithm to a 100-item psychological questionnaire. Using the questionnaire, they could predict political orientation with only 66% accuracy.

> Here, we use personality, a psychological construct closely associated with, and often used to approximate, political orientation [27]. Facebook users’ scores on a well-established 100-item-long five-factor model of personality questionnaire [28] were entered into a tenfold cross-validated logistic regression to predict political orientation.
>
> The results presented in Fig. 3 show that the highest predictive power was offered by openness to experience (65%), followed by conscientiousness (54%) and other traits. In agreement with previous studies [27], liberals were more open to experience and somewhat less conscientious. Combined, five personality factors predicted political orientation with 66% accuracy—significantly less than what was achieved by the face-based classifier in the same sample (73%). In other words, a single facial image reveals more about a person’s political orientation than their responses to a fairly long personality questionnaire, including many items ostensibly related to political orientation (e.g., “I treat all people equally” or “I believe that too much tax money goes to support artists”).
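
To make the questionnaire baseline a bit more concrete, here is a minimal sketch of a tenfold cross-validated logistic regression in plain Python. This is not the authors' code: the data is synthetic, the two features (stand-ins for openness and conscientiousness) and their effect sizes are made up, and all function names are my own. It only shows the procedure: split the sample into ten folds, fit on nine, predict the held-out fold, and count how many held-out predictions were right.

```python
import math
import random


def train_logistic(X, y, lr=0.1, epochs=200):
    """Fit logistic regression weights with batch gradient descent."""
    n_features = len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            z = b + sum(wj * xj for wj, xj in zip(w, xi))
            err = 1.0 / (1.0 + math.exp(-z)) - yi  # predicted prob minus label
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict(w, b, xi):
    """Return 1 if the predicted probability exceeds 0.5, else 0."""
    return 1 if b + sum(wj * xj for wj, xj in zip(w, xi)) > 0 else 0


def cross_validated_accuracy(X, y, k=10, seed=0):
    """Share of correct held-out predictions across k folds."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    correct = 0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        w, b = train_logistic([X[i] for i in train], [y[i] for i in train])
        correct += sum(predict(w, b, X[i]) == y[i] for i in fold)
    return correct / len(X)


def make_synthetic_sample(n=400, seed=1):
    """Made-up data: label 1 is 'liberal', features are two trait scores."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        openness = rng.gauss(0.5 if label else -0.5, 1.0)
        conscientiousness = rng.gauss(-0.1 if label else 0.1, 1.0)
        X.append([openness, conscientiousness])
        y.append(label)
    return X, y


if __name__ == "__main__":
    X, y = make_synthetic_sample()
    print(f"tenfold CV accuracy: {cross_validated_accuracy(X, y):.2f}")
```

The point of the held-out folds is that every prediction is made for a person the model never saw during fitting, so the reported accuracy is not inflated by memorising the training sample.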

This is astonishing – and potentially frightening. Note also that political orientation is only one of many things you could infer. In a different paper, a computer algorithm was able to predict sexual orientation with about 76% accuracy (87% with 5 pictures). You can fake answers on a psychology questionnaire and obscure your thoughts in verbal communication. Hiding your face is much harder.

> Facial images can be easily (and covertly) taken by a law enforcement official or obtained from digital or traditional archives, including social networks, dating platforms, photo-sharing websites, and government databases. They are often easily accessible; Facebook and LinkedIn profile pictures, for instance, are public by default and can be accessed by anyone without a person’s consent or knowledge. Thus, the privacy threats posed by facial recognition technology are, in many ways, unprecedented.

This, presumably, is just the beginning and the implications are potentially large:

> Some may doubt whether the accuracies reported here are high enough to cause concern. Yet, our estimates unlikely constitute an upper limit of what is possible. Higher accuracy would likely be enabled by using multiple images per person; using images of a higher resolution; training custom neural networks aimed specifically at political orientation; or including non-facial cues such as hairstyle, clothing, headwear, or image background. Moreover, progress in computer vision and artificial intelligence is unlikely to slow down anytime soon. Finally, even modestly accurate predictions can have tremendous impact when applied to large populations in high-stakes contexts, such as elections. For example, even a crude estimate of an audience’s psychological traits can drastically boost the efficiency of mass persuasion [35].

Facial recognition is already in use by law enforcement and other authorities. Clearview AI, for example, has (secretly) created a database of millions of pictures that it makes accessible to police departments. Activists have started to fight back by building a database of their own to identify police officers during protests using facial recognition. I’m really curious whether we will see a restriction or ban of facial recognition technology anytime in the future.